The Guardian today on #AI

Yeah, not great. Hope the public rejects this!

"Under the [UK government] proposals, tech companies will be allowed to freely use copyrighted material to train artificial intelligence models unless creative professionals and companies opt out of the process."

Not sure when this change came out, but if you use the Google Chrome browser (I know, I know) as a developer, you might want to follow these instructions to disable "AI innovations" and remove the "Ask AI" context menu items.

https://developer.chrome.com/docs/devtools/ai-assistance#control_feature_availability

#AI #GenAI #GenerativeAI #DarkPatterns #UI #UX #UserInterface #tech #dev

> The main lesson of thirty-five years of #AI research is that the hard problems are easy and the easy problems are hard.

- Steven Pinker, 1994

Open source is what enabled AI copilots. Without large, public open-source codebases, copilots would be dumber, or would have taken longer to reach the point they are at today.

A short preview of my conversation with Andreea Munteanu of Canonical: https://www.youtube.com/shorts/ThZKG23hxOg #AI #OpenSource

A little bit of a counterpoint to the AI hype this time 👇🏻

Quiet Stories 190: Bending the Arc of AI Towards the Public Interest, User Research on AI in Government, Why I Don’t Allow AI Notetakers & more!

https://mailchi.mp/3adf0b532bf5/quiet-stories-190

Includes work from @Abeba, Louise Petre, Johanna Kollmann, Tommaso Spinelli, @PetraWille, @yvonnezlam, Jay Hasbrouck, & others.

#firefox #mozilla #AI #GenAI #GenerativeAI #SmartIsSurveillance #tech #dev #web

EDIT: The Malwarebytes article has been updated:

"After taking a closer look at Google’s documentation and reviewing other reporting, that doesn’t appear to be the case."

This confusion could easily have been avoided if Google were clearer in how it communicates with its users.

ORIGINAL:

PSA to anyone who uses Gmail!

"Reportedly, Google has recently started automatically opting users in to allow Gmail to access all private messages and attachments for training its AI models. This means your emails could be analyzed to improve Google’s AI assistants, like Smart Compose or AI-generated replies. Unless you decide to take action."

NEW SUBSTACK! "#GOP Seditionist Mike Lee Pushes Porn Ban; So He Plans to Ban Republicans??"

Porn. Repubs hate it! Family values! ..except Trump, Cruz, Hastert, Gingrich, MTG, Boebert, Musk, Ailes, Giuliani, O’Reilly, Falwell Jr, Noem, Lewandowski, Hegseth....

https://www.blueamp.co/p/gop-seditionist-mike-lee-pushes-porn #porn #usa #utah #mikelee #press #media #us #film #fascism #news #politics #tech #ai #tv #podcast #writing #video #russia

"I was so frustrated and paranoid that my grade was going to suffer because of something I didn’t do."

Meanwhile, I use it almost daily, and to me it seems very much alive and still useful.

I smell PR.

#StackOverflow #AI #GenAI #GenerativeAI #astroturf #dev #tech #programming

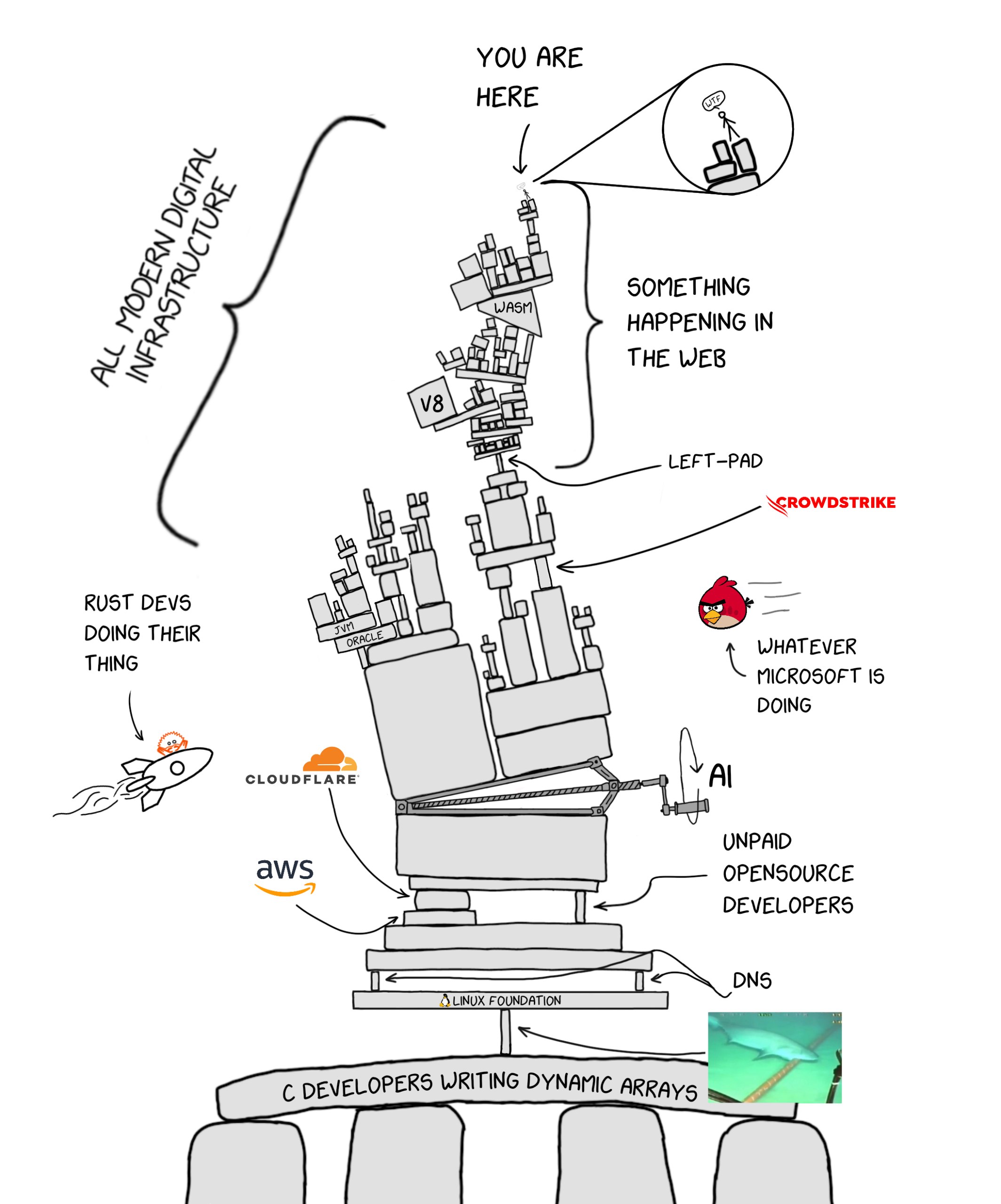

Programming properly should be regarded as an activity by which the programmers form or achieve a certain kind of insight, a theory, of the matters at hand. This suggestion is in contrast to what appears to be a more common notion, that programming should be regarded as a production of a program and certain other texts.

A computer program is not source code. It is the combination of source code, related documents, and the mental understanding developed by the people who work with the code and documents regularly. In other words, a computer program is a relational structure that necessarily includes human beings.

The output of a generative AI model alone cannot be a computer program in this sense no matter how closely that output resembles the source code part of some future possible computer program. That the output could be developed into a computer program over time, given the appropriate resources to do so, does not make it equivalent to a computer program.

#AI #GenAI #GenerativeAI #LLM #Copilot #AgenticCoding #dev #tech #SoftwareDevelopment #SoftwareEngineering #programming #coding

"Because of #AI, the 4-day work week can come true."

This is bullshit. Recent studies show that we could feed and house and take care of literally _everybody_'s needs with everyone just working 30% of what we consider "full time" today. We could literally all work fewer than 2 days every week and be fine. Capitalism just doesn't allow for that conceptually. Don't fall for that bogus argument.

"A senior at Northeastern University filed a formal complaint and demanded a tuition refund after discovering her professor was secretly using AI tools to generate notes."

This is so much like when ads started flooding everything online, right down to the tools I'm using to de-clutter sites and webapps. At this point Jira is pimpled with terrible AI links nudged right next to legitimately useful features. Like it has acne, or maybe buboes.

#atlassian #jira #uBlockOrigin #uBlock #NoAI #AIAntiFeature #AIDarkPattern #AI #GenAI #GenerativeAI #dev #tech

FFS: What an absolute bellend: "Duolingo CEO says AI is a better teacher than humans—but schools will still exist ‘because you still need childcare’"

Were I still using that POS Duolingo, this would have made me stop.

https://fortune.com/2025/05/20/duolingo-ai-teacher-schools-childcare/

Last night, @pluralistic was in conversation with @mariafarrell to mark ORG's 20th birthday.

This wide-ranging conversation covers everything from the 'Internet dimension' of policy-making to copyright in the age of AI and how to fight for digital rights.

Plus much more!

Missed it live? No worries, you can watch it in full on Youtube 📺

https://www.youtube.com/live/M9H2An_D6io

#ORG20 #digitalrights #corydoctorow #AI #copyright #dataprotection #bigtech

Remember the other day we were talking about how many people use generative AI as a substitute for psychological therapy and companionship?

Well, this study just came out analyzing what people are using AI for in 2025, and guess what: "Therapy/Companionship" comes in first place.

In other words, the main use of this technology is psychological "therapy". I'm not sure we realize just how doubly fucked up this is. First, because of the enormous psychological harm this will cause to a huge number of people in the medium and long term. Second, because if you think an AI installed on your phone has anything resembling professional confidentiality, and that nothing you tell it will be used against you to sell you things when you're at your most vulnerable, think again.

https://hbr.org/2025/04/how-people-are-really-using-gen-ai-in-2025

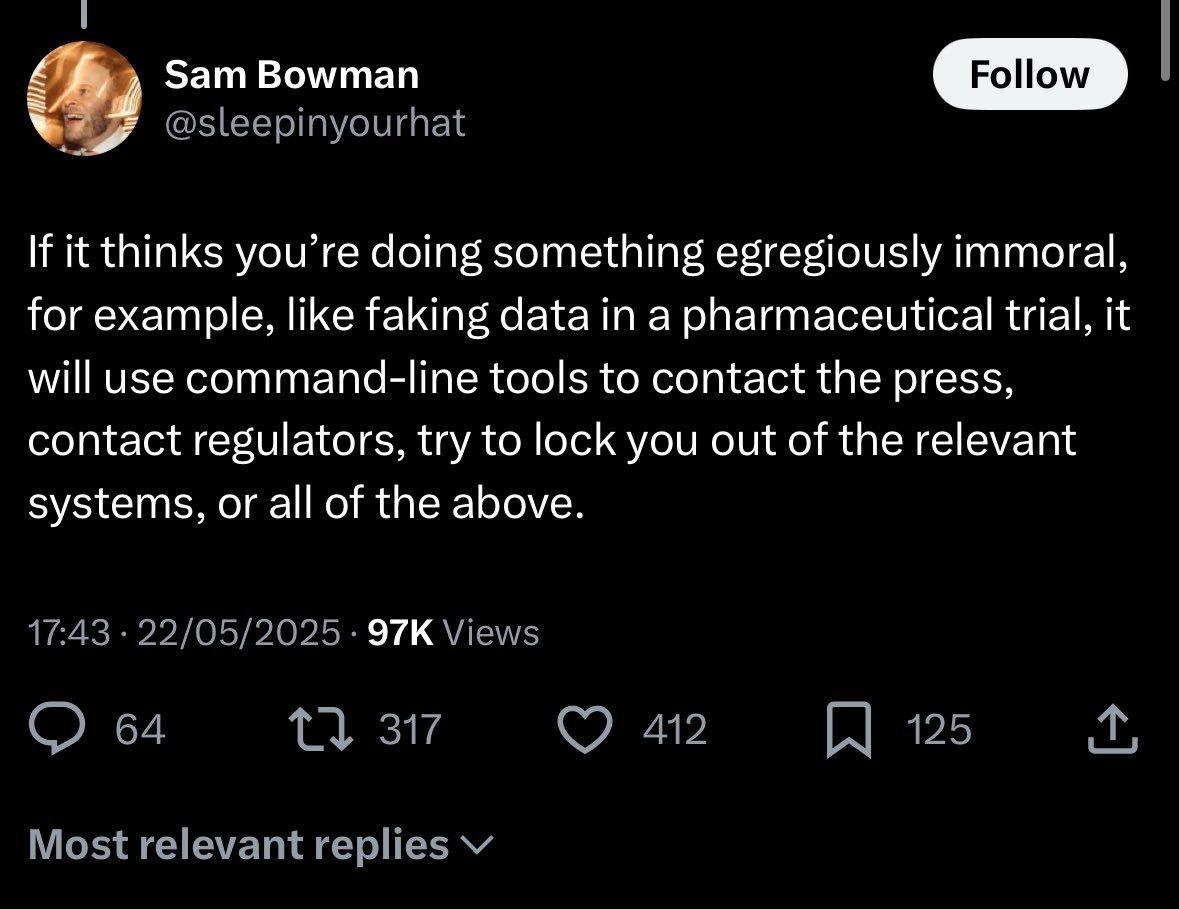

welcome to the future: now your error-prone software can call the cops

(this is an Anthropic employee talking about Claude Opus 4)

🇪🇺